One of the most significant events in human history is the creation of Artificial Intelligence (AI). While 15% of enterprises are already using AI, 31% say they will be using it within a year.

Not only does AI transform traditional computing methods, but it also has an impact on various industries. The most affected industry, of course, is Information Technology. The IT industry is all about systems and software, which makes Artificial Intelligence especially important in this sector.

Before discussing the impact of AI on Information Technology, let’s first look at what exactly Artificial Intelligence is.

What is Artificial Intelligence?

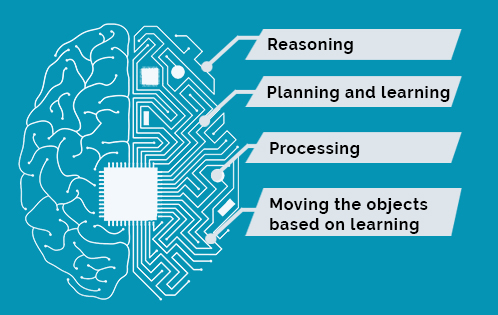

Artificial Intelligence is the creation of intelligent machines that act and function like humans. With this technology, machines perform human cognitive functions such as problem-solving and learning.

There are three classifications of Artificial Intelligence: analytical, human-inspired, and humanized Artificial Intelligence.

Artificial Intelligence works on algorithms and computer-based training that help machines learn to solve complicated problems and develop simulations. The goals of AI include:

In the IT industry, Artificial Intelligence is changing the way software and programs are developed. Software created this way not only solves a problem but also learns from experience for future applications.


Impact of Artificial Intelligence in Various Sectors of the IT Industry

Information Technology is the use of computer systems for the storage, transmission, and processing of data for an enterprise or organization. Artificial Intelligence therefore impacts the information technology industry in many ways.

Be it voice recognition and virtual assistants or automating work without human intervention, the effects of Artificial Intelligence are everywhere in this industry.

Here are some of the major sectors of IT and the benefits Artificial Intelligence brings to them:

Secure Systems

Confidential data is valuable to any organization, and a breach of its security systems can be costly, especially for government organizations. Artificial Intelligence uses various algorithms to create layers of security within systems. AI not only defends the system against potential threats but also repairs and learns from errors or vulnerabilities to protect the data from future risks.

Automation

It is estimated that by the year 2025, AI and automation will replace 7% of jobs in the US alone. Automation carries out work of any type or volume without human intervention. Organizations are adopting automation at a fast rate because it is cost-effective. Automation based on AI algorithms adjusts to the company’s needs and also learns from past errors.

Productivity

Coding is a tricky business for programmers and requires a lot of trial and error. Artificial Intelligence provides suggestions to coders, ultimately increasing productivity and reducing downtime.

Processing Power

Computers have improved and advanced since they first came into use. Artificial Intelligence not only increases processing speed but also handles data like a human brain.

Quality Assurance

Quality Assurance is the use of the right tools in the development of software. By using AI tools, developers can fix current issues and prepare for future bugs or fixes. One example of such a tool is ‘Bugspots’, a bug-prediction tool that flags the parts of a code base most likely to contain bugs, based on the history of bug-fix commits, without the help of humans.
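To make the idea of preparing for future bugs more concrete, here is a minimal sketch of a "hot spot" score that ranks files by how often and how recently they were touched by bug-fix commits. The function name `hotspot_scores`, the input format, the logistic weighting, and the constant 12 are all assumptions chosen for illustration; this article does not describe how any particular tool computes its ranking.

```python
import math
from collections import defaultdict


def hotspot_scores(fixes, start, end):
    """Rank files by a recency-weighted count of bug-fix commits.

    fixes: iterable of (filename, timestamp) pairs, one per bug-fix commit
    start, end: timestamps bounding the history window (end must be > start)
    """
    scores = defaultdict(float)
    for filename, ts in fixes:
        # Normalize the commit time to the range 0..1 (1 = most recent).
        t = (ts - start) / (end - start)
        # Hypothetical weighting: recent fixes count far more than old ones.
        scores[filename] += 1.0 / (1.0 + math.exp(-12.0 * t + 12.0))
    # Highest score first: these files deserve the closest review.
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Made-up history: report.py has more fixes, but auth.py's are recent.
fixes = [
    ("auth.py", 90), ("auth.py", 100),
    ("report.py", 10), ("report.py", 20), ("report.py", 30),
]
ranking = hotspot_scores(fixes, start=0, end=100)
for filename, score in ranking:
    print(f"{filename}: {score:.4f}")
```

In this made-up history, `auth.py` ranks first even though `report.py` has more fixes, because the weighting favors recent activity. A team could use such a ranking to decide where to focus code review and testing.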

Conclusion

Artificial Intelligence is the most critical recent development in the Information Technology industry. It helps create more reliable software and programs that are cost-efficient and error-free. Developers have used AI to carry out the most complex operations and to expand their range of vision.

Both based on computer science, Artificial Intelligence and Information Technology go hand in hand in changing how various sectors operate, including programming, planning, quality assurance, productivity, and automation. We can enhance both machine and human capabilities by using AI in Information Technology.

